We seem to be experiencing a minor revolution in audio product testing. For the last two decades, audio product testing has been almost entirely subjective, rarely based on anything more than the opinion of a single listener, formed in uncontrolled, sighted tests. Until recently, SoundStage! was one of only a few audio publishing outlets presenting controlled, objective testing—specifically, audio measurements. But recently, measurements have become more common on websites, online forums, and YouTube. As someone who, since the late 1990s, has been nagging for more audio measurements in reviews, I should be happy about this—and I am, but it has me concerned, too.

The reasons for the renewal of interest in audio measurement are the rapid growth of free or low-cost measurement gear, such as Room EQ Wizard and the miniDSP EARS, and the relatively recent development of science-based standards such as the Harman curve for headphone measurements, CTA-2034-A for speaker measurements, and CTA-2010 for subwoofer output measurements.

Those are all welcome developments, but as I simultaneously celebrate and lament the 30th anniversary this month of my first published audio product review (of the AudioSource SS Three surround decoder/amplifier, for Video magazine), I can’t help recalling the numerous times when I and my colleagues have put too much faith in measurements, trusting that we didn’t need to devote time to hands-on evaluation because the numbers told us everything we needed to know. I’d like to share those tales with you, with the caveat that all data included below are to the best of my memory.

Episode 1: the laserdisc players

This first event occurred early in my career, which began just as the first video products with digital signal processing (DSP) started to hit the market. Before that, we could safely judge most video products almost entirely by their measured resolution and signal-to-noise (S/N) ratios. But while many of the new products with DSP had very high S/N ratios, some also produced digital artifacts that the eye could easily detect but the measurements could not.

For example, in one of the first blind product comparison tests I conducted, we rounded up four leading laserdisc players in the $1200 (all prices USD) price category, all of which used DSP. As high and low anchors, we chose the Pioneer LD-S2 (a $3500, DSP-equipped reference player that had the highest S/N ratio our technical editor, Lance Braithwaite, had ever measured) and a $400 garden-variety Hitachi player (actually a rebadged Pioneer design). We expected all four of the $1200 players to beat the Hitachi, and the LD-S2 to beat the $1200 players.

Sure enough, the LD-S2 beat all the $1200 players—but to our amazement, the analog Hitachi player beat them all. As I recall, it delivered better subjective resolution because it didn’t have the image-softening digital artifacts the others did. And as we’d already started to realize, most of the laserdisc players on the market had signal-to-noise ratios high enough that the noise wasn’t visible, so higher S/N ratios didn’t matter—just as you can’t hear the difference in noise between audio products with S/N ratios of, say, 90dB and 120dB. We later encountered similar experiences with camcorders, where products that measured a few dB better sometimes produced a visibly inferior picture.
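To put that 90dB-versus-120dB comparison in concrete terms, here is a minimal sketch that converts an S/N ratio in dB into a linear ratio. It assumes the usual amplitude (voltage) convention of 20·log10; the function name `snr_db_to_ratio` is just an illustrative choice, not something from any measurement package.

```python
def snr_db_to_ratio(snr_db: float) -> float:
    """Convert an S/N ratio in dB to a linear signal-to-noise amplitude ratio.

    Assumes the amplitude (voltage) convention: dB = 20 * log10(signal / noise).
    """
    return 10 ** (snr_db / 20)


for snr_db in (90, 120):
    ratio = snr_db_to_ratio(snr_db)
    # Express the noise as a fraction of the signal amplitude.
    print(f"{snr_db} dB S/N: noise is about 1/{ratio:,.0f} of the signal amplitude")
```

At 90dB the noise is already only about 1/31,600 of the signal amplitude, and at 120dB it is one millionth. The spec sheet shows a 30dB improvement, but both figures describe noise that is a tiny fraction of the signal.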

Episode 2: the subwoofers

I encountered a similar situation a few years later, around 1998, when I tested a half-dozen high-end subwoofers for Home Theater magazine. At the time, the most significant measurement of a subwoofer, and really the only one, was considered to be its frequency response, which was usually measured by putting the measurement microphone about 1/4″ from the woofer (and, if applicable, from the port or passive radiator, with the results then summed). This measurement had to occur at a very low signal level, or it would push the microphone into distortion.

By this measurement, the best subwoofer of the bunch was a slim, dual-10″ sealed model from Von Schweikert Audio, which I measured with a -3dB response down to 19Hz. The worst was a 15″ ported model from B&W (in the decades before and after, the company spelled out its name as Bowers & Wilkins), which had a -3dB response down to just 30Hz. Yet in my blind tests, the panelists raved about the B&W’s deep bass extension and complained that the Von Schweikert didn’t have enough bottom end for action movies.

Baffled by this, I ran every standard test I could think of to explain the difference (including pulling the plate amps out and measuring those), and then I started concocting experimental measurements. I finally got my answer when I used an Audio Precision analyzer to measure the subs’ output in dB versus distortion, at 20, 40, 60, and 80Hz. This measurement lined up perfectly with the listener impressions—the chunky B&W delivered ample output at 10% total harmonic distortion at 20Hz, while the slim Von Schweikert was down about 15dB under the same conditions. It appeared the Von Schweikert sub had been EQ’d with a bass boost to make it flat down to 20Hz (still a common practice with small subs), but its amp and drivers didn’t have the physical capacity to deliver those low frequencies at a useful volume.
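The idea behind an output-versus-distortion measurement can be sketched in a few lines. This is only an illustration under stated assumptions, not the procedure I ran on the Audio Precision and not the CTA-2010 method: it takes a stepped level sweep at a single frequency and interpolates the output level at which THD first reaches a chosen limit. The function name `output_at_thd_limit` is hypothetical, and every number in the two sweeps is invented purely to show the arithmetic.

```python
def output_at_thd_limit(levels_db, thd_percent, limit=10.0):
    """Return the output level (dB) at which THD first reaches `limit` percent.

    levels_db and thd_percent are parallel lists from a stepped sweep at one
    frequency, ordered from quietest to loudest. The result is linearly
    interpolated between the two steps that straddle the limit. If the limit
    is never reached, the loudest measured level is returned.
    """
    if thd_percent[0] >= limit:
        # Already past the limit at the quietest step.
        return levels_db[0]
    points = list(zip(levels_db, thd_percent))
    for (lo_db, lo_thd), (hi_db, hi_thd) in zip(points, points[1:]):
        if lo_thd < limit <= hi_thd:
            frac = (limit - lo_thd) / (hi_thd - lo_thd)
            return lo_db + frac * (hi_db - lo_db)
    return levels_db[-1]


# Invented 20Hz sweeps for two hypothetical subs (NOT real measurements).
big_sub = output_at_thd_limit([90, 95, 100, 105, 110], [1, 2, 4, 8, 16])
small_sub = output_at_thd_limit([75, 80, 85, 90, 95], [1, 2, 4, 8, 16])

print(f"Big sub:   {big_sub:.2f} dB at 10% THD")
print(f"Small sub: {small_sub:.2f} dB at 10% THD")
print(f"Difference: {big_sub - small_sub:.2f} dB")
```

Two subs could show the same flat low-level frequency response and still differ by a large margin on a number like this, which is the kind of gap a close-miked, low-level response measurement cannot reveal.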

The CTA-2010 standard eventually codified subwoofer output measurements, which have become a common component of subwoofer reviews for some publications. But that left a legacy of about two decades’ worth of subwoofer measurements that misrepresented the products’ capabilities—and we still see this misrepresentation on some subwoofer spec sheets.

Episode 3: the consulting gig

Still more years later, I was hired as a consultant by a mass-market electronics company trying to figure out why its audio products were getting such bad reviews. (I was reviewing only high-end speakers and projectors at the time, so it wasn’t a conflict of interest.) Another consultant had set them up with a 100-point performance assessment scale based entirely on measurements, but by reviewers’ judgments and the company’s own admission, competing products that scored only, say, a 65 on that scale sometimes clearly sounded better than one of the company’s products that scored 80.

The frequency-response measurements that the previous consultant had prescribed were fine in general, but they were developed for reasonably high-quality speakers, just as the CTA-2034-A standard was. But with the tiny, bass-challenged woofers used for cheap soundbars, home-theater-in-a-box systems, Bluetooth speakers, and TVs, distortion sometimes becomes the overwhelming consideration. In these products, it’s often a good idea to build in a boost at, say, 250Hz, which can at least create an impression of decent bass response without pushing the driver beyond its limits. It may also be a good idea to high-pass filter the woofers well above the frequency where distortion becomes a problem, and to add a corresponding high-frequency roll-off, which makes the system sound subjectively full and balanced even if there’s little response below about 200Hz.

Traditional speaker measurements would tell you these products are terrible, even if your ears might tell you they’re OK. The solution I recommended was to compare the company’s upcoming products with competing products in blind listening panels, using people outside the engineering and marketing teams. (I don’t know the end of the story because one of the company’s competitors hired me away soon afterward.)

The bottom line

In all of these cases, only by our subjective experiences did we realize that judging the products entirely by the measurements was a big mistake. But subjective assessment is often missing from today’s measurement-oriented reviews. Sometimes the product is simply given a pass-fail grade, and it’s condemned if the measurements don’t hold up to the standards noted above. Or, in the case of electronics, the product might be dubbed a failure purely because it has, say, a worse S/N ratio or more jitter than some competing product, even though the variations we usually see in these measurements have no proven correlation with listener perceptions.

I consider myself lucky to have worked over the decades for several publications that not only paid for my measurements but also were willing to fund the innumerable blind panel tests I’ve conducted. These tests let me put my measurements in perspective, and they’ve taught me how uncertain the results can be when you ask someone to listen to an audio product and tell you what they think of it.

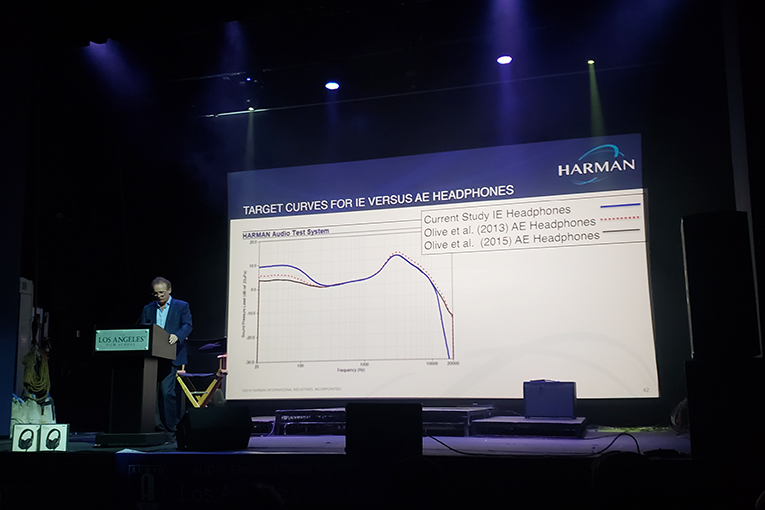

I’m glad we have scientific standards that give us a better idea of how audio measurements correlate with subjective performance assessments. For instance, I’m thrilled that we have the Harman curve as a good general target for headphone response. Yet I rarely see reviewers mention the fact that there are really six variants on the Harman curve: three for headphones, three for earphones. And I know from the years of reader response I’ve received, in comments sections and through e-mail, that many audio enthusiasts prefer a more trebly response, and some prize spaciousness over accurate frequency response.

And what will happen as more headphones incorporate DSP, and engineers put less effort into acoustical design and instead just use the DSP to EQ the headphones to the Harman curve or some other target? Will we see something similar to what happened with video products, where a major change in the way the products were designed introduces new artifacts that our old measurements couldn’t detect?

The only way we can be sure is if manufacturers, reviewers, and audio enthusiasts continue to perform subjective assessments of audio products and don’t put too much trust in the machines.

. . . Brent Butterworth